Flow4R: Unifying 4D Reconstruction and Tracking with Scene Flow

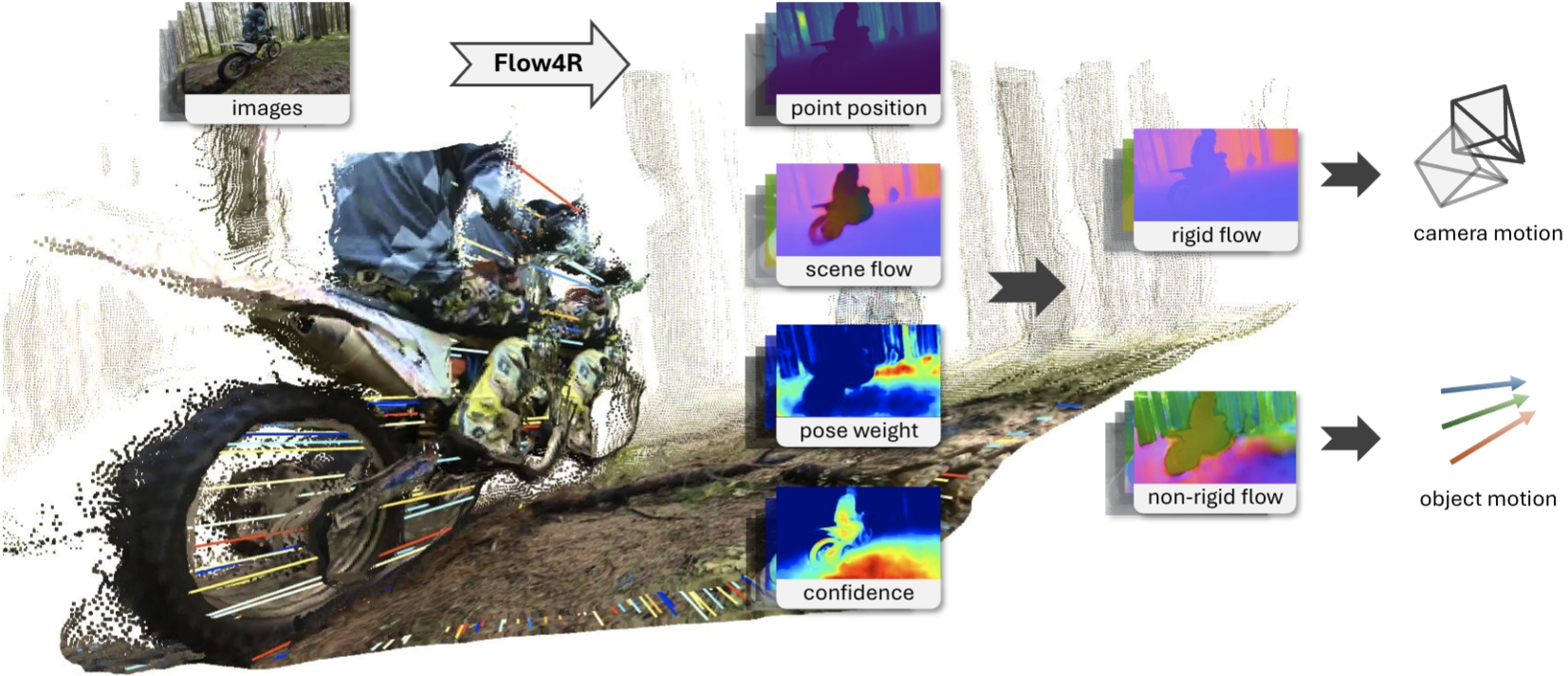

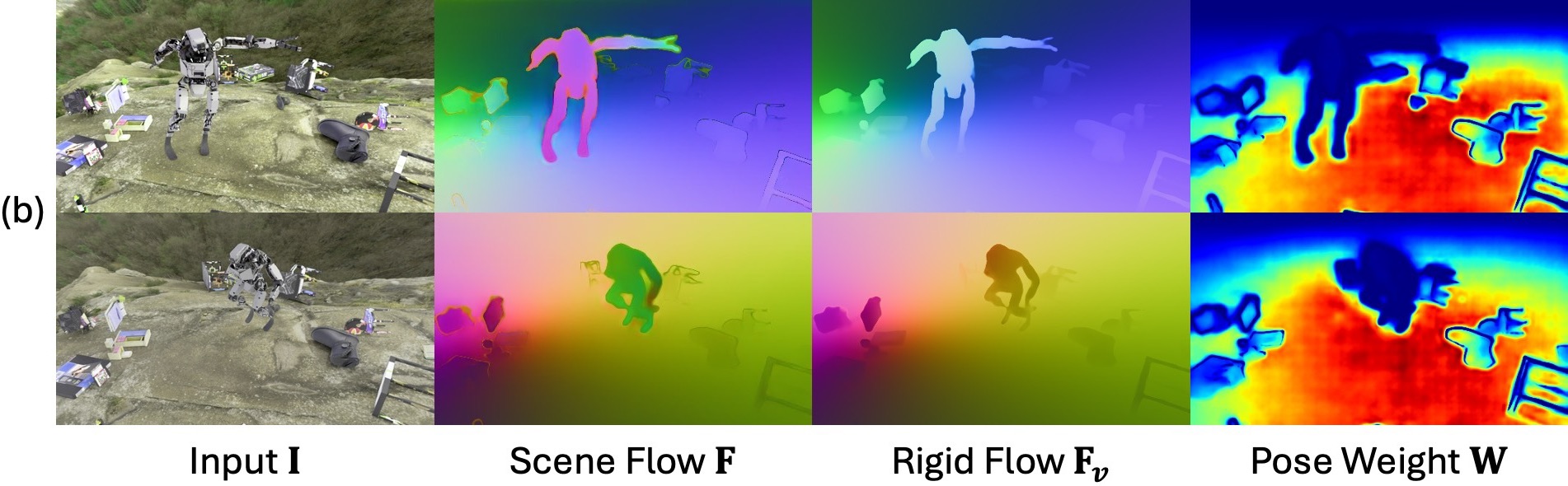

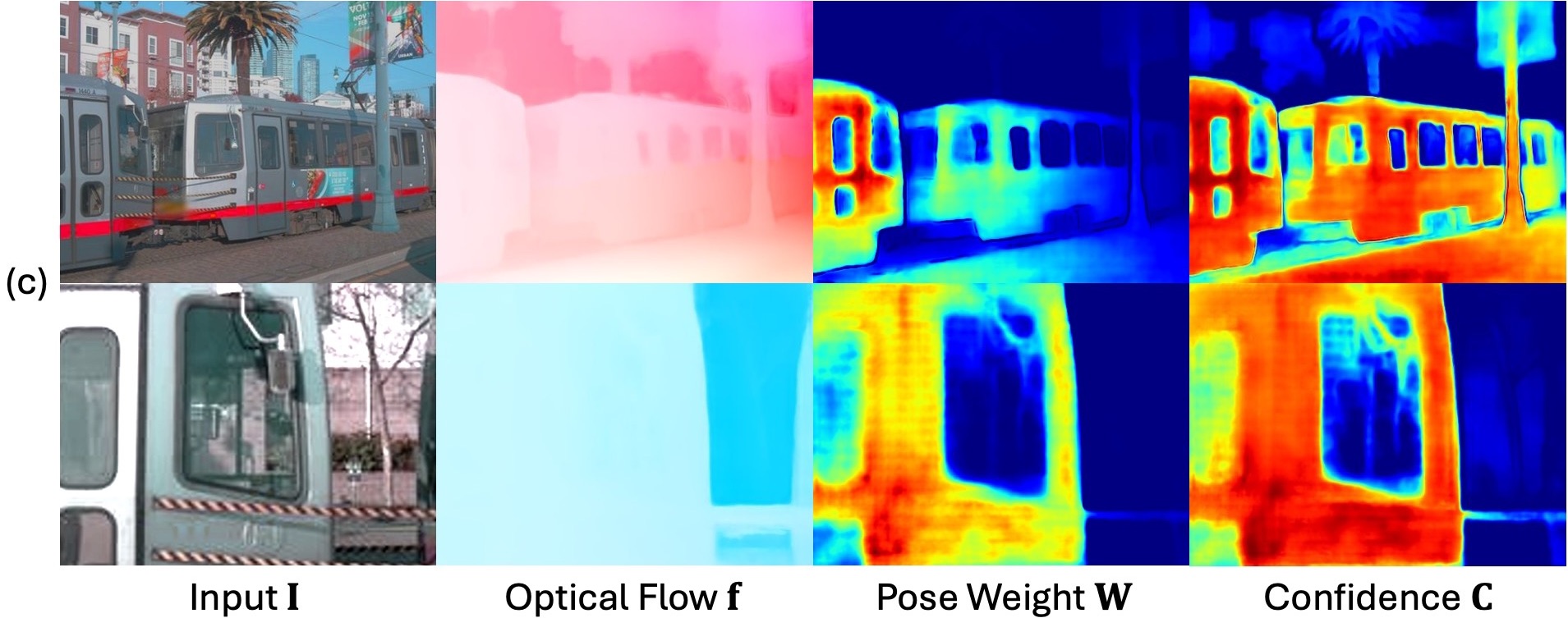

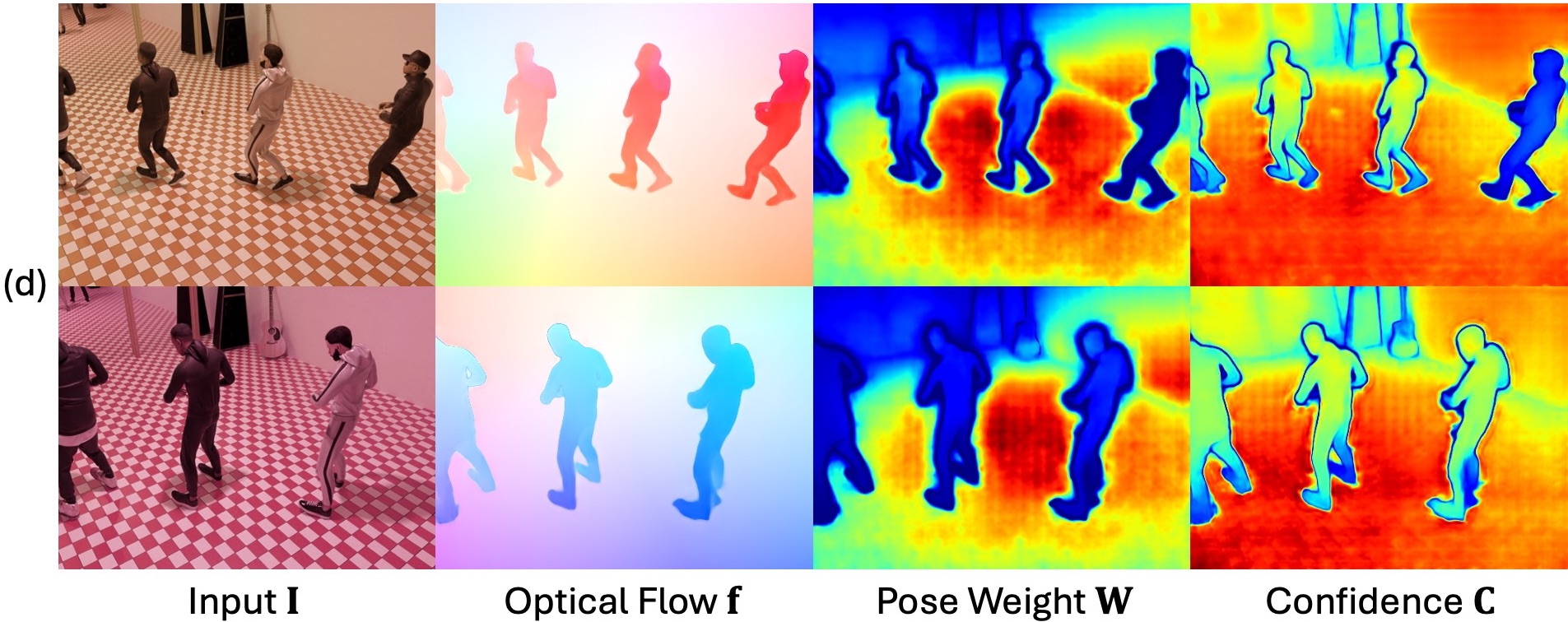

Given an image pair, Flow4R predicts, for each image, the point position $\mathbf{P}$, scene flow $\mathbf{F}$, pose weight $\mathbf{W}$, and confidence $\mathbf{C}$. Central to our framework, the scene flow $\mathbf{F}$ captures the motion of points relative to the camera and is thus independent of the choice of coordinate system. Guided by the pose weight $\mathbf{W}$, the scene flow $\mathbf{F}$ can be accurately decomposed into camera motion and object motion, enabling stable reconstruction and flexible tracking in both static and dynamic scenes.
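The decomposition can be illustrated with a minimal sketch. This page does not specify the solver, so the code below assumes that $\mathbf{P} + \mathbf{F}$ expresses each point in the paired camera's frame and uses $\mathbf{W}$ as per-pixel weights in a weighted Kabsch (Procrustes) fit. The function names are hypothetical. High-weight, static points determine the relative camera pose $(R, t)$, and whatever part of the scene flow that rigid motion cannot explain is attributed to object motion.

```python
import numpy as np

def weighted_rigid_fit(src, dst, w):
    """Weighted Kabsch: R, t minimizing sum_i w_i * ||R @ src_i + t - dst_i||^2."""
    w = w / w.sum()                          # normalize non-negative weights
    mu_src = w @ src                         # weighted centroids, shape (3,)
    mu_dst = w @ dst
    src_c, dst_c = src - mu_src, dst - mu_dst
    H = (w[:, None] * src_c).T @ dst_c       # 3x3 weighted cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_dst - R @ mu_src
    return R, t

def decompose_scene_flow(P, F, W):
    """Hypothetical decomposition of camera-space scene flow.

    P: (N, 3) points in the current camera's frame.
    F: (N, 3) scene flow; ASSUMPTION: P + F is the point in the paired camera's frame.
    W: (N,) non-negative pose weights (high = static, reliable for pose).
    """
    Q = P + F
    R, t = weighted_rigid_fit(P, Q, W)
    camera_flow = P @ R.T + t - P            # motion explained by the camera alone
    object_flow = F - camera_flow            # residual attributed to object motion
    return R, t, camera_flow, object_flow
```

Because dynamic pixels receive low weight, they barely influence $(R, t)$, and their `object_flow` remains non-zero. A hard static/dynamic mask is the special case of binary weights.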

Abstract

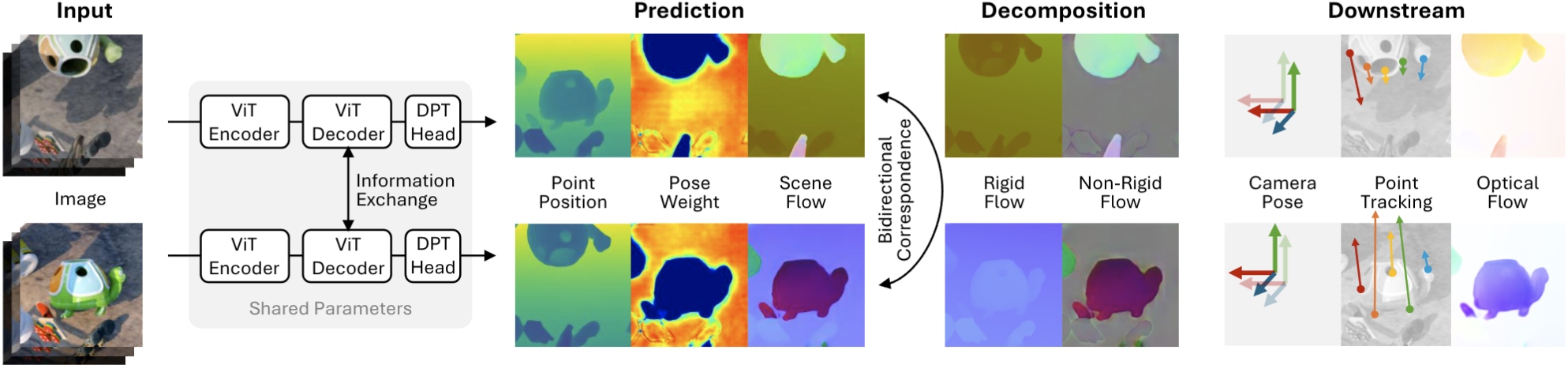

Reconstructing and tracking dynamic 3D scenes remains a fundamental challenge in computer vision. Existing approaches often decouple geometry from motion: multi-view reconstruction methods assume static scenes, while dynamic tracking frameworks rely on explicit camera pose estimation or separate motion models. We propose Flow4R, a unified framework that treats camera-space scene flow as the central representation linking 3D structure, object motion, and camera motion. Flow4R predicts a minimal per-pixel property set (3D point position, scene flow, pose weight, and confidence) from a pair of input views using a Vision Transformer. This flow-centric formulation allows local geometry and bidirectional motion to be inferred symmetrically with a shared decoder in a single forward pass, without requiring explicit pose regressors or bundle adjustment. Trained jointly on static and dynamic datasets, Flow4R achieves state-of-the-art performance on 4D reconstruction and tracking tasks, demonstrating the effectiveness of the flow-centric representation for spatiotemporal scene understanding.

Pipeline

Flow4R takes two images at a time as input and predicts a pixel-aligned property set, comprising point position $\mathbf{P}$, scene flow $\mathbf{F}$, pose weight $\mathbf{W}$, and confidence $\mathbf{C}$ (omitted in this figure), from which various downstream predictions can be derived.
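As one example of a downstream prediction, depth and 2D correspondences (optical flow) follow from projecting $\mathbf{P}$ and $\mathbf{P} + \mathbf{F}$. This sketch assumes pinhole intrinsics `K_a` and `K_b` for the two views, which are not among the outputs listed above, and that $\mathbf{P} + \mathbf{F}$ lies in the paired camera's frame. The helper names are hypothetical.

```python
import numpy as np

def project(points, K):
    """Pinhole projection of (N, 3) camera-space points to (N, 2) pixel coordinates."""
    uvw = points @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def derive_correspondences(P, F, K_a, K_b):
    """Hypothetical helper. ASSUMPTIONS: P is in camera a's frame, P + F is in
    camera b's frame, and K_a, K_b are known 3x3 pinhole intrinsics."""
    depth_a = P[:, 2]                        # per-pixel depth in view a
    uv_a = project(P, K_a)                   # reprojected pixel in view a
    uv_b = project(P + F, K_b)               # matching pixel in view b
    return depth_a, uv_a, uv_b, uv_b - uv_a  # last term: 2D optical flow a -> b
```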

Evaluation

World Coordinate 3D Point Tracking

We report performance on four datasets, Aria Digital Twin (ADT), Dynamic Replica (DR), Point Odyssey (PO), and Panoptic Studio (PS), using the Average Points under Distance ($\text{APD}_{3D}\uparrow$) metric for all points and for dynamic points after global median alignment. We also compare model sizes in the last column. The best and second-best results are marked in bold and underlined, respectively.

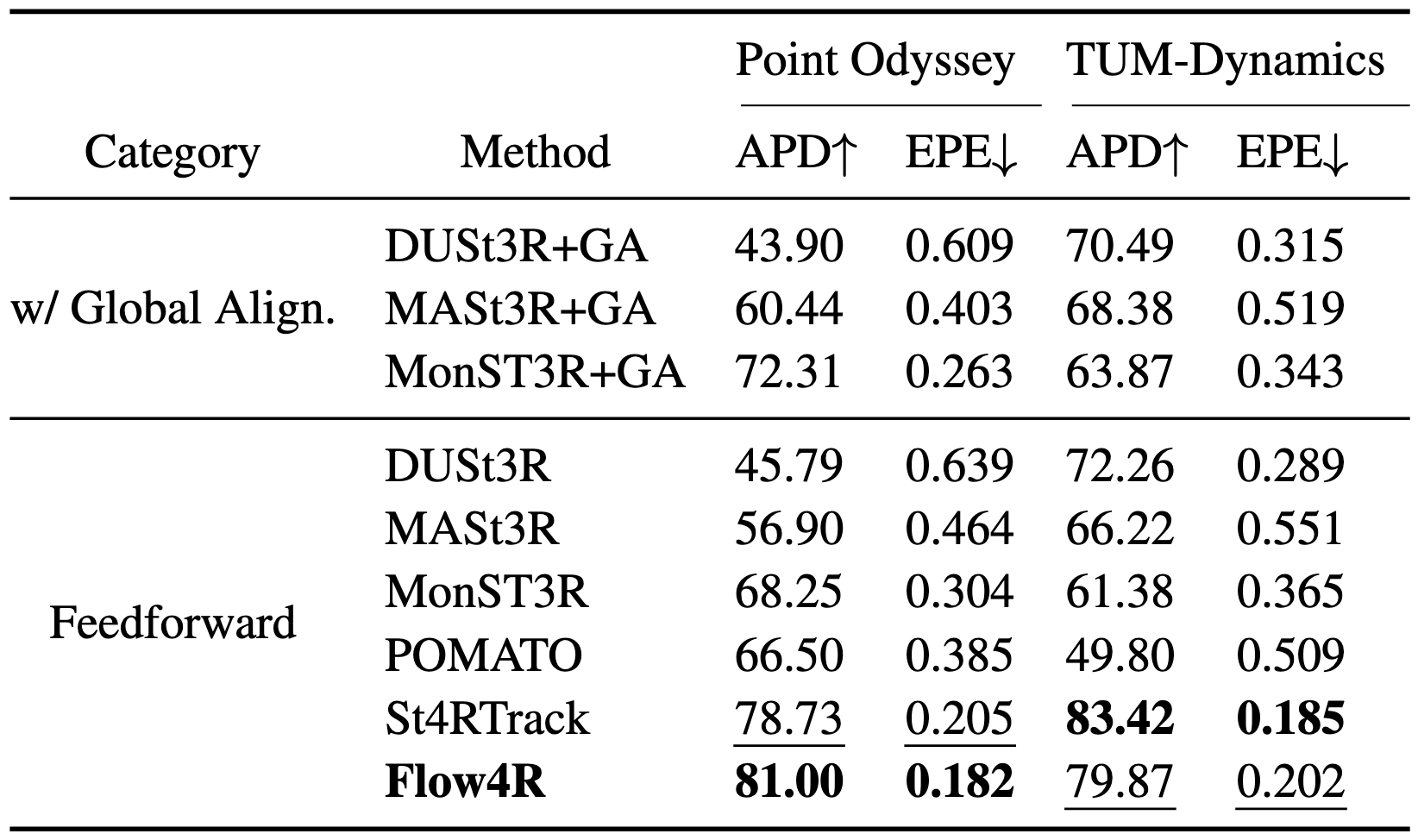
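A rough sketch of the metric follows. Predictions are first rescaled globally so that their median point norm matches the ground truth (our assumed reading of "global median alignment"). $\text{APD}_{3D}$ then averages, over several distance thresholds, the percentage of points whose 3D error falls below each threshold. The thresholds below are placeholders, not the benchmark's values, and the optional `mask` stands in for the dynamic-point subset.

```python
import numpy as np

def median_align(pred, gt):
    """ASSUMED alignment: one global scale matching median point norms."""
    s = np.median(np.linalg.norm(gt, axis=-1)) / np.median(np.linalg.norm(pred, axis=-1))
    return pred * s

def apd3d(pred, gt, thresholds=(0.05, 0.1, 0.2, 0.4, 0.8), mask=None):
    """Average percentage of points within each distance threshold.

    pred, gt: (N, 3) world-space points; thresholds are PLACEHOLDER values.
    mask: optional boolean (N,) selecting a subset (e.g. dynamic points).
    """
    pred = median_align(pred, gt)            # align using all points
    err = np.linalg.norm(pred - gt, axis=-1)
    if mask is not None:
        err = err[mask]                      # evaluate only the selected subset
    return 100.0 * np.mean([(err < d).mean() for d in thresholds])
```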

World Coordinate 3D Reconstruction

We report performance on Point Odyssey and TUM-Dynamics after global median scaling. The best and second-best results are marked in bold and underlined, respectively.

Visualization

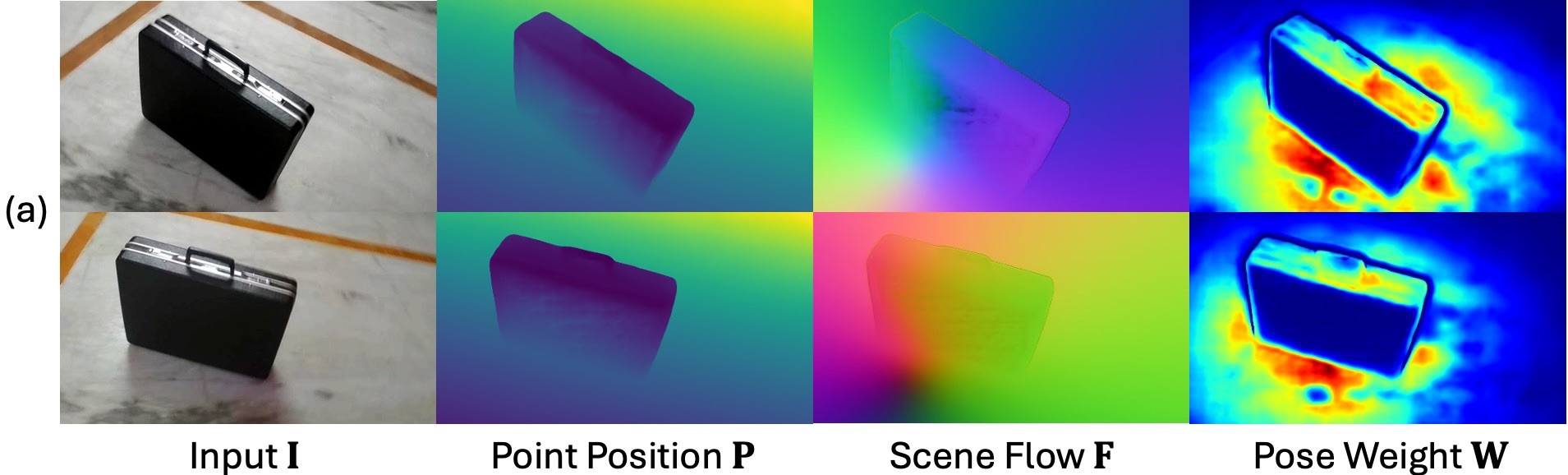

2D Visualization

The point position map $\mathbf{P}$ captures scene geometry in each image's local space. The scene flow map $\mathbf{F}$ describes how each point moves from the current image to its pair, capturing both camera and object motion. The pose weight map $\mathbf{W}$ indicates which pixels are reliable for camera pose estimation. The confidence map $\mathbf{C}$ indicates the uncertainty of the predictions.

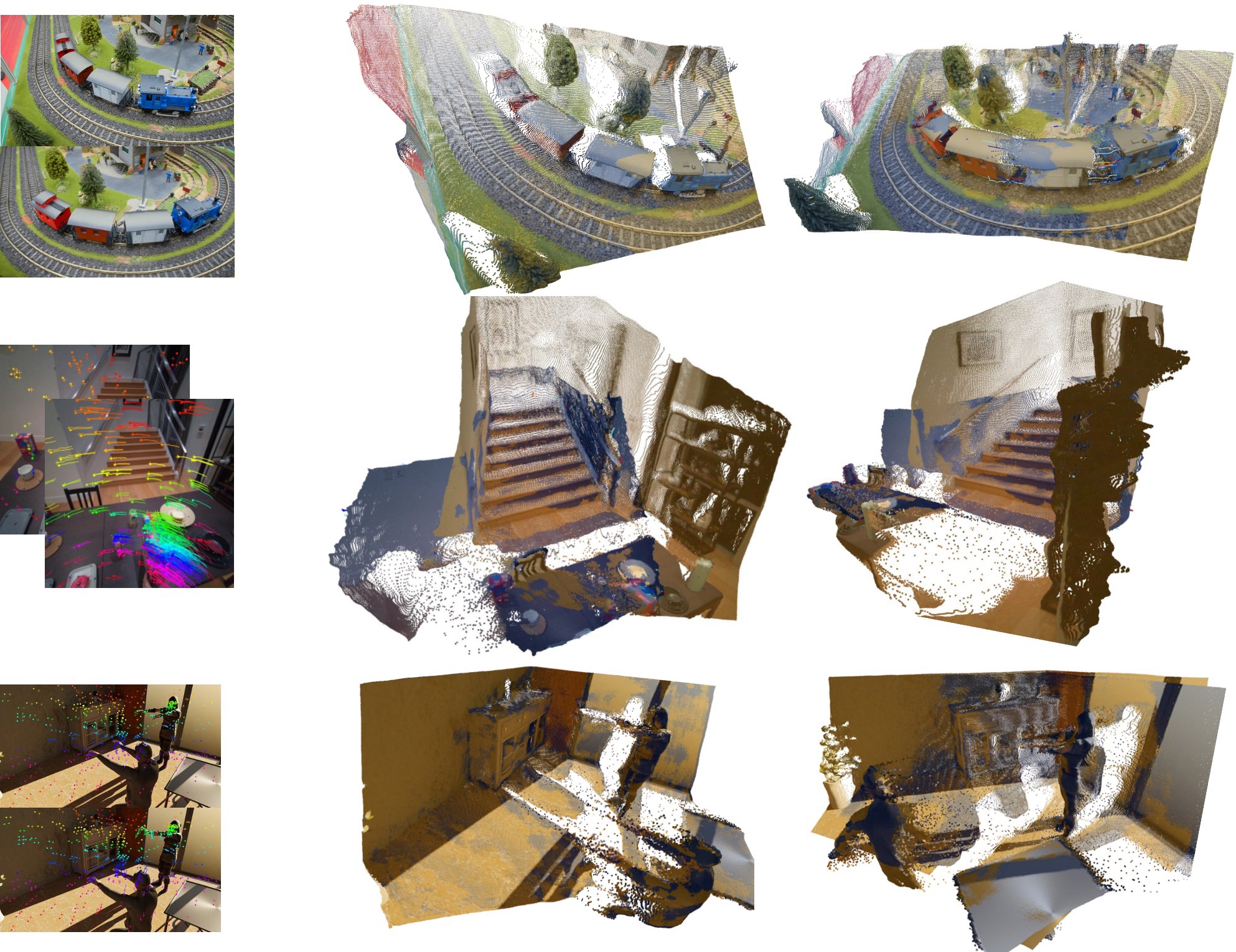

3D Visualization

We render the reconstructed and tracked points within a global coordinate system. Both dynamic elements (such as the train and human figures) and stationary structures (including the tree, ladder, wall, and table) exhibit high 3D consistency, demonstrating the effectiveness of our flow-centric tracking and reconstruction pipeline.
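Rendering in a global coordinate system requires placing each frame's local points in a common frame. One simple way, sketched here under assumptions not stated on this page, is to take frame 0 as the world frame, treat each consecutive pair's relative pose as mapping camera-$i$ coordinates to camera-$(i+1)$ coordinates (for example, as recovered from $\mathbf{F}$ and $\mathbf{W}$), and chain these transforms. The names are hypothetical, and drift correction is omitted.

```python
import numpy as np

def to_homogeneous(R, t):
    """Pack a rotation (3, 3) and translation (3,) into a 4x4 transform."""
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, t
    return T

def chain_world_from_cam(rel_poses):
    """ASSUMPTION: rel_poses[i] maps camera-i coordinates to camera-(i+1) coordinates.
    Returns world-from-camera transforms, with camera 0 defining the world frame."""
    cam_from_world = [np.eye(4)]
    for T_rel in rel_poses:
        cam_from_world.append(T_rel @ cam_from_world[-1])  # compose along the sequence
    return [np.linalg.inv(T) for T in cam_from_world]

def points_to_world(P_local, world_from_cam):
    """Map (N, 3) points from a camera's local frame into the world frame."""
    return P_local @ world_from_cam[:3, :3].T + world_from_cam[:3, 3]
```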

Related Work

Our work is closely related to:

- DUSt3R: Geometric 3D Vision Made Easy

- MonST3R: A Simple Approach for Estimating Geometry in the Presence of Motion

- ZeroMSF: Zero-shot Monocular Scene Flow Estimation in the Wild

- Dynamic Point Maps: A Versatile Representation for Dynamic 3D Reconstruction

- St4RTrack: Simultaneous 4D Reconstruction and Tracking in the World

- POMATO: Marrying Pointmap Matching with Temporal Motions for Dynamic 3D Reconstruction

- D²USt3R: Enhancing 3D Reconstruction with 4D Pointmaps for Dynamic Scenes

Moving away from dedicated decoders for specific coordinate systems or timestamps, Flow4R adopts a symmetric architecture that predicts local geometry and relative motion using a shared decoder and head.